Google has won a contract with the Defense Innovation Unit (DIU) to use recent technological advancements to improve the accuracy of cancer diagnoses. About five percent of diagnoses are made incorrectly, and in half of those cases the incorrect diagnosis causes problems further down the road.

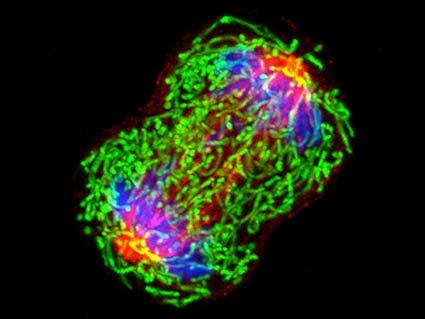

Google plans to train an AI using the open-source platform TensorFlow to detect cancerous cells in images taken through a microscope. It has already started developing the neural networks, using the Google Cloud Healthcare API to de-identify and segment existing datasets. Once the AI is fully trained, Google will design its own microscope with an integrated AR (augmented reality) overlay that shows physicians information about the likelihood of cells being cancerous.

“To effectively treat cancer, speed and accuracy are critical,” said Mike Daniels, vice president of Google Cloud’s department for the Global Public Sector. “We are partnering with the DIU to provide our machine learning and artificial intelligence technology to help frontline healthcare practitioners learn about capabilities that can improve the lives of our military men and women and their families.”

Google hopes that its method will reduce the “overwhelming volume of data” physicians face and make diagnoses faster, cheaper, and more accurate. But widespread use of AI in medical practice is still a while away. The first shipment of microscopes will go to select Defense Health Agency treatment facilities for research only. Afterward, the technology will roll out to the broader US Military Health System and Veterans Affairs hospitals for real-world trials.